RL in the Age of LLMs

TLDR

This is a “home-recipe guide” to RL for LLMs:

Written for individuals looking to get started in an afternoon.

Simplifying the complexities of research papers and production-grade code.

Everything in this guide runs on self-contained, minimal code with low-compute examples.

All code lives in a self-contained Python package.

Everything runs within 30 minutes on a mid-range MacBook or equivalent.

This is best read interactively:

Run the code to learn how fast, unstable, and involved the training routines are.

Use an LLM assistant to read this guide and the code, combining all the sources to answer your specific questions.

A Home Cook’s Guide to RL

Learning to bake a cake is a fun afternoon’s activity, and that is the vibe of this section of the guidebook. Each tutorial is a self-contained recipe with a complete implementation of the algorithm and a verbal explanation, all built on an LLM you can train yourself – on a laptop.

Fig. 22 Generated by Gemini Nano Banana, which is trained in part with reinforcement learning.

This section contains:

RL algorithms such as PPO, GRPO, and DPO

Every piece of terminology is introduced in this guide, and every example runs on a single laptop, so the guide stays largely self-contained and minimal. Here’s how it contrasts with other sources out there.

From Research Papers

Imagine trying to learn how to bake a cake by reading “Water Absorption Capacity Determines the Functionality of Vital Gluten Related to Specific Bread Volume.” Research papers are important, but they are written for other researchers, not for practitioners looking to learn the fundamentals.

From Production Code Libraries

There are already great code libraries for RL out there, but they’re written for computational efficiency at scale. This means the core ideas are abstracted away.

Fig. 23 Not the best place to learn how to bake a loaf of bread.

It’s like trying to learn how to bake a loaf at a production-volume bread factory – good luck separating the bread recipe from all the considerations made for large-scale baking! And you’ll waste a lot of money if you learn by tweaking the formula in that setting.

From General Reinforcement Learning Guides

General reinforcement learning guides cover the basics that apply to all recipes: bread, pies, cakes, pizzas, basically anything that is baked. You should still read them, but here we want to focus specifically on how RL is applied to modern LLMs.

Who Should Read This and Why

This is for three types of people:

Those who are curious about how RL shapes LLMs and want to see how the sausage is made, not just read a text description.

Applied folks training LLMs who need a deeper understanding of how to use existing LLM libraries and interpret their outputs.

Future LLM RL developers and researchers who want a bridge to the more complicated papers and code out there.

The challenge in learning LLM RL is that the ideas are spread across decades of research and many domains. LLM-specific libraries are often production grade, which means the core logic is hidden behind APIs or optimized for computational throughput rather than written for human learning.

Our Learning Trajectory

All of this is why I wrote this practitioner’s guide to RL for LLMs. I showcase the most widely used RL algorithms for LLM post-training, the ones that actually shape LLM behavior.

We’ll cover the absolute basics of RL with IceMaze to make sure the fundamentals are understood, and then we’ll start implementing RL on a tiny LLM. Papers and YouTube videos have their place, but they give you the end product without the messy intermediate parts (which actually represent the majority of the work). By contrast, playing with working code yourself will give you the deepest understanding.

What Is Omitted

To make this a home cooking guide, here’s what we left out:

Heavy Mathematical Notation - While symbolic notation is necessary for detailing algorithms in papers, we instead use code to detail the algorithms, and good ol’ text to provide intuitive explanations.

Distributed Training Code and Batching - Libraries that implement RL often use many layers of abstraction for code reuse, along with computational tricks such as batching, approximations for speed, or distributed implementations that are well suited to long-running production jobs. These obscure the mathematics and fundamentals of the RL ideas, though. This guide provides single-file implementations of every algorithm, with the core often being 30 lines or less.

General Instruction-Tuned “Chat Grade” LLMs - General chat-grade LLMs are large and admittedly come with additional training complexity. In this guide, we’ll build our own completion LLM. Granted, it won’t cover every use case or pitfall of RL training, but it will be enough to show you the basics and equip you with the skills and confidence to customize your own models.

How to Read This Guide

Again, this guide is meant to be more than a static set of words and code files. Take the content here as a guide and make it your own.

In particular:

Run the code with a debugger - When running code, you can inspect things like tensor shapes or intermediate values. This will give you a deeper sense of what is happening. Install using uv or your favorite package manager.

Install by running:

uv pip install "git+https://github.com/canyon289/GenAiGuidebook.git@main#subdirectory=genaiguidebook/clean_llm_rl"

Modify the examples - Change the data or training yourself. This will allow you to see the effect of different configurations, and as a learner you’ll be more likely to remember your examples than mine!

Reference source papers and production grade code examples - These will contain additional details that will be easier to understand once you have the fundamentals.

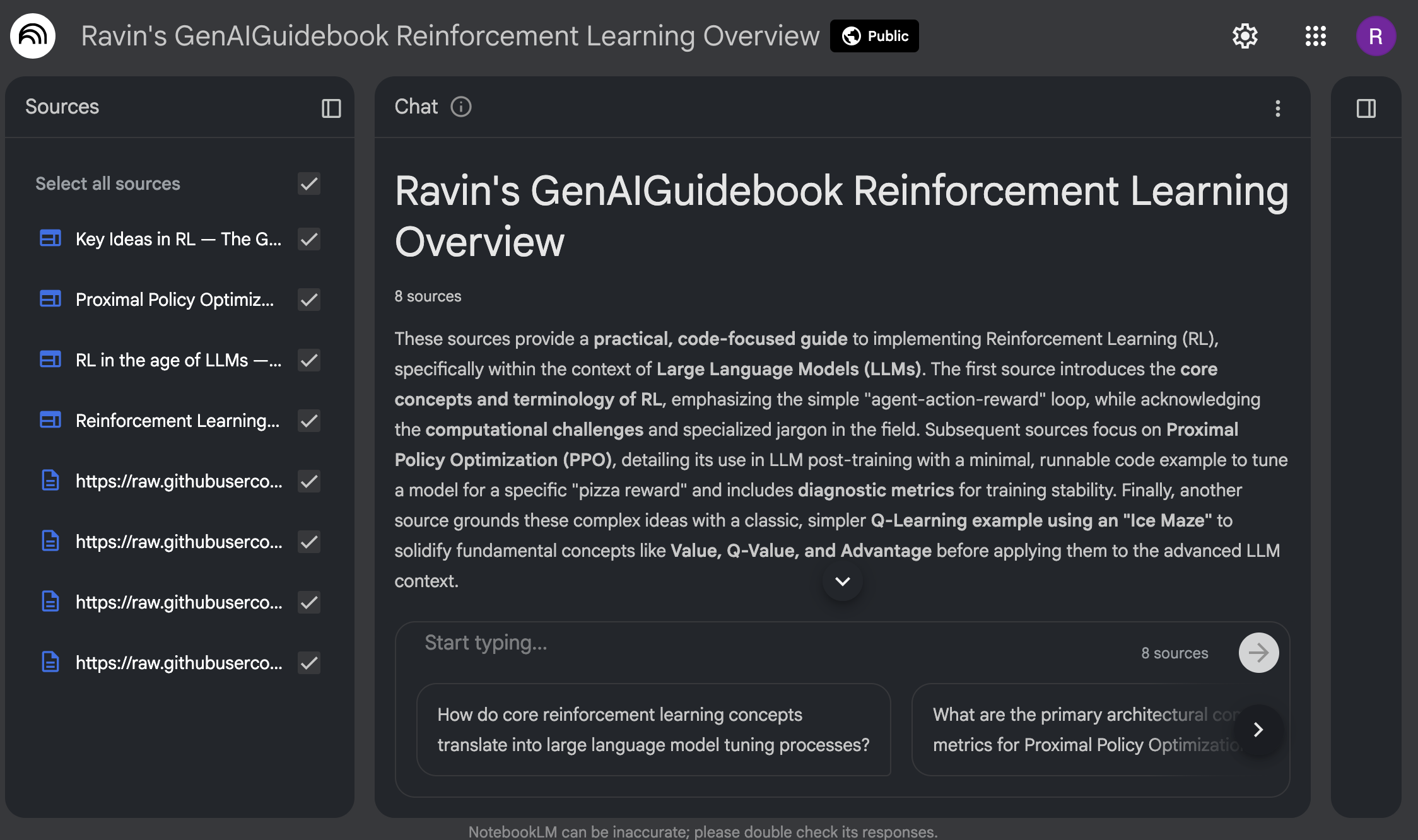

Use NotebookLM and other LLMs#

Using NotebookLM, you can read, listen, watch, generate flash cards, or even quiz yourself on the content! I’ve preloaded a NotebookLM instance for you. I encourage you to also add in your own sources and notes, such as the references provided throughout this guidebook.

Fig. 24 A NotebookLM preloaded with this RL Guidebook to speed up your learning.

I also provide suggested prompts in each section. These prompts are meant to help you find supporting information for the content, or push your understanding one step further. More holistically though, they are encouragement for you to read beyond this guidebook into the areas that are of most interest to you.

Contributions Welcome!

If you see any issues or have suggestions, open an issue or pull request!

Suggested Prompts

What is the history of RL, and how does it intersect with language models?

What are some additional references for how RL is applied to large language models?

References

These are the most concise references for the field as a whole that I could find. If you’re looking to dive in more deeply, I recommend these rather than piecing the knowledge together yourself.

General RL

Reinforcement Learning: An Introduction, Second Edition - If you want to learn the general basics of RL, Sutton and Barto’s book covers the fundamentals of reinforcement learning and is often cited in RL discussions.

OpenAI Guide to RL - Written pre-LLM, it contains a good mix of practical advice on how to learn RL, as well as explanations for key algorithms.

Reinforcement Learning Overview - Kevin Murphy’s overview of the general modern RL space beyond just LLMs. It’s been updated as recently as 2025.

LLM Specific

Understanding Reinforcement Learning for Model Training - This is an LLM-specific RL overview from Rohit Patel, which contains equations but no code. If you need a general understanding without code, this is great.

Unsloth Guide to RL - The Unsloth folks build the most technically deep (and practical) content on the topics of model training, including RL. All their guides contain everything you need to DIY with fast implementations across a family of models.

OLMo Open Instruct/Tülu Codebase - The full recipe from the AI2 team, showing the details of what it takes to apply RL to a frontier-level LLM.

Written On My Own

As with the rest of the guidebook, this is written entirely on my own without involvement from any other organization.