Tutorial - Image Tokenization and Reconstruction#

TL;DR

We will be using the TiTok model for image tokenization and reconstruction.

Key features of TiTok include:

1D tokenization

Efficiency

Competitive performance

Scalability

Applications of TiTok include:

Image generation

Image reconstruction

Computer vision tasks

We will demonstrate the use of TiTok for image tokenization and reconstruction in Python.

Understanding TiTok: The Transformer-based 1-Dimensional Tokenizer#

TiTok is an innovative model designed for image tokenization, addressing the limitations of traditional 2D tokenization methods. By transforming images into 1D latent sequences, TiTok significantly enhances the efficiency and effectiveness of image representation, particularly in generative tasks.[^ref1]

The tokens generated by TiTok are Hard Tokens, which are discrete and non-differentiable. This approach allows for more efficient processing and storage of image data, making it ideal for various applications in computer vision and generative modeling.

Key Features of TiTok#

1D Tokenization: Unlike conventional methods that utilize 2D latent grids (like VQGAN), TiTok reduces images into a compact 1D sequence of tokens. This approach minimizes redundancy by effectively capturing the similarities present in adjacent regions of an image.

Efficiency: A typical image of size 256 x 256 x 3 can be represented using just 32 discrete tokens with TiTok, compared to the hundreds or thousands of tokens generated by previous techniques. This compact representation leads to reduced computational demands and faster processing times.

Competitive Performance: Despite its smaller token count, TiTok achieves performance metrics comparable to state-of-the-art models. For instance, it has demonstrated a gFID score of 1.97, outperforming the MaskGIT baseline by 4.21 at the ImageNet 256 x 256 benchmark.

Scalability: The advantages of TiTok become even more pronounced at higher resolutions. It maintains its efficiency and effectiveness when applied to larger images, making it a versatile choice for various applications in image generation and reconstruction.

Applications of TiTok#

TiTok’s unique capabilities make it suitable for a range of applications:

Image Generation: By generating high-quality images from minimal latent representations, TiTok can be integrated into generative adversarial networks (GANs) and other frameworks.

Image Reconstruction: Its ability to reconstruct images from a compact set of tokens allows for efficient storage and transmission of visual data.

Computer Vision Tasks: TiTok can enhance various computer vision tasks by providing a more effective way to represent and manipulate image data.

In summary, TiTok represents a significant advancement in image tokenization techniques, offering a more efficient and effective approach compared to traditional methods. Its ability to reduce images to a minimal number of tokens while maintaining competitive performance makes it an exciting tool for researchers and practitioners in the field of computer vision and generative modeling.

Now, let’s put this model to the test and see how it performs in practice!

Prerequisites#

First, you need to clone the repository from the ByteDance GitHub page. You can do this by running the following command in your terminal:

%%bash

git clone https://github.com/bytedance/1d-tokenizer.git tokenizer

Cloning into 'tokenizer'...

Then, navigate to the 1d-tokenizer and install the required packages by running the following commands:

%%bash

cd tokenizer

pip3 install -r requirements.txt

Also, make sure you have the following libraries installed:

torchnumpyPIL(Python Imaging Library)huggingface-hub(for downloading models)

You can install the required libraries using pip:

%%bash

pip install torch torchvision pillow huggingface-hub omegaconf

Import Required Libraries#

Start by importing the necessary libraries. Open your environment or Jupyter Notebook and execute the following code:

%%bash

cd tokenizer

import os

os.chdir('tokenizer')

import demo_util

import numpy as np

import torch

from PIL import Image

from huggingface_hub import hf_hub_download

from modeling.maskgit import ImageBert

from modeling.titok import TiTok

import matplotlib.pyplot as plt

from pprint import pprint

Load Pretrained Models#

You can load pretrained models from Hugging Face or download them locally. Here’s how to do both:

Load from Hugging Face#

titok_tokenizer = TiTok.from_pretrained("yucornetto/tokenizer_titok_l32_imagenet")

titok_generator = ImageBert.from_pretrained("yucornetto/generator_titok_l32_imagenet")

Option 2: Download Locally#

If you prefer to download files directly, use:

hf_hub_download(repo_id="fun-research/TiTok", filename="tokenizer_titok_l32.bin", local_dir="./")

hf_hub_download(repo_id="fun-research/TiTok", filename="generator_titok_l32.bin", local_dir="./")

Set Random Seed and Device#

Setting a random seed ensures reproducibility. Choose the device based on your hardware (CPU or GPU):

torch.backends.cuda.matmul.allow_tf32 = True

torch.manual_seed(0)

device = "cuda" if torch.cuda.is_available() else "cpu"

titok_tokenizer.to(device)

titok_generator.to(device)

ImageBert(

(model): BertModel(

(embeddings): BertEmbeddings(

(word_embeddings): Embedding(5098, 768)

(position_embeddings): Embedding(33, 768)

(token_type_embeddings): Embedding(2, 768)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

(encoder): BertEncoder(

(layer): ModuleList(

(0-23): 24 x BertLayer(

(attention): BertAttention(

(self): BertSdpaSelfAttention(

(query): Linear(in_features=768, out_features=768, bias=True)

(key): Linear(in_features=768, out_features=768, bias=True)

(value): Linear(in_features=768, out_features=768, bias=True)

(dropout): Dropout(p=0.1, inplace=False)

)

(output): BertSelfOutput(

(dense): Linear(in_features=768, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

)

(intermediate): BertIntermediate(

(dense): Linear(in_features=768, out_features=3072, bias=True)

(intermediate_act_fn): GELUActivation()

)

(output): BertOutput(

(dense): Linear(in_features=3072, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

)

)

)

(lm_head): Linear(in_features=768, out_features=4096, bias=True)

)

)

Prepare Configuration#

Load the configuration for TiTok models:

config = demo_util.get_config("configs/infer/titok_l32.yaml")

print(config)

{'experiment': {'tokenizer_checkpoint': 'tokenizer_titok_l32.bin', 'generator_checkpoint': 'generator_titok_l32.bin', 'output_dir': 'titok_l_32'}, 'model': {'vq_model': {'codebook_size': 4096, 'token_size': 12, 'use_l2_norm': True, 'commitment_cost': 0.25, 'vit_enc_model_size': 'large', 'vit_dec_model_size': 'large', 'vit_enc_patch_size': 16, 'vit_dec_patch_size': 16, 'num_latent_tokens': 32, 'finetune_decoder': True}, 'generator': {'model_type': 'ViT', 'hidden_size': 768, 'num_hidden_layers': 24, 'num_attention_heads': 16, 'intermediate_size': 3072, 'dropout': 0.1, 'attn_drop': 0.1, 'num_steps': 8, 'class_label_dropout': 0.1, 'image_seq_len': '${model.vq_model.num_latent_tokens}', 'condition_num_classes': 1000, 'randomize_temperature': 9.5, 'guidance_scale': 4.5, 'guidance_decay': 'linear'}}, 'dataset': {'preprocessing': {'crop_size': 256}}}

Load the Image#

Load an image from the specified file path. For this tutorial, I’m using a sample image of a cat, but you can use any image you like. For example, you can download images from the COCO dataset.

image_path = "../../images/test_image_1.png" # path to the image you want to tokenize

original_image = Image.open(image_path)

# Resize the image to 256x256

# original_image = original_image.resize((256, 256)) # Resize the image to 256x256 if it's not already

print(original_image.size)

display(original_image)

(256, 256)

Preprocess the Image#

The code below converts an image into a PyTorch tensor with the shape [1, Channels, Height, Width] and normalizes the pixel values to the range [0, 1]. This tensor is now ready for input into a PyTorch model for further processing or inference.

image = torch.from_numpy(np.array(original_image).astype(np.float32)).permute(2, 0, 1).unsqueeze(0) / 255.0

image.shape

torch.Size([1, 3, 256, 256])

Tokenization#

Now we can tokenize the preprocessed image.

encoded_tokens = titok_tokenizer.encode(image.to(device))[1]["min_encoding_indices"] # Extract the minimum encoding indices

encoded_tokens.shape

torch.Size([1, 1, 32])

As you can see, the image is tokenized into just 32 tokens! We can print the tokens

pprint(encoded_tokens)

tensor([[[2931, 2500, 2713, 432, 3197, 531, 3748, 2074, 533, 3694, 1659,

2498, 1346, 2523, 1638, 2947, 1907, 2265, 1857, 3371, 1638, 2109,

327, 2615, 3040, 146, 3507, 3215, 3454, 1822, 234, 2252]]])

Reconstruction#

Now, let’s reconstruct the image from the tokens. First we’ll decode the tokens into a tensor, then convert the tensor into a numpy array, and finally display the image.

reconstructed_image = titok_tokenizer.decode_tokens(encoded_tokens) # Decode the tokens back to a tensor

reconstructed_image.shape

torch.Size([1, 3, 256, 256])

Post-Processing of Reconstructed Image#

reconstructed_image = torch.clamp(reconstructed_image, 0.0, 1.0)

reconstructed_image = (reconstructed_image * 255.0).permute(0, 2, 3, 1).to("cpu", dtype=torch.uint8).numpy()[0]

reconstructed_image.shape

(256, 256, 3)

Convert Back to PIL Image#

Convert the NumPy array back into a PIL Image for display purposes.

reconstructed_image = Image.fromarray(reconstructed_image)

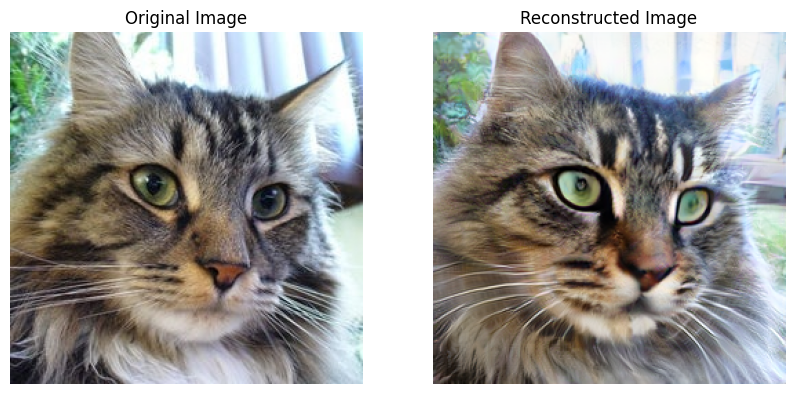

Display Original and Reconstructed Images#

Print details about both images and display them.

plt.figure(figsize=(10, 5))

plt.subplot(1, 2, 1)

plt.title("Original Image")

plt.imshow(original_image)

plt.axis('off')

plt.subplot(1, 2, 2)

plt.title("Reconstructed Image")

plt.imshow(reconstructed_image)

plt.axis('off')

plt.show()

Despite using a compact set of tokens, the reconstructed image retains significant detail and closely resembles the original. This demonstrates TiTok’s capability to efficiently encode and decode images while preserving essential features, making it a powerful image tokenization and reconstruction tool.

Conclusion#

In this tutorial, you learned how to tokenize an image into discrete tokens using the TiTok model and reconstruct it back to its original form. You also learned how to display the original and reconstructed images side by side.

Feel free to modify the code and experiment with different images or configurations!

References#

An Image is Worth 32 Tokens for Reconstruction and Generation - Official TiTok Project Page