Agents#

TL;DR

GenAI Agents are LLMs that can “do things”

Unfortunately, “agent” is poorly defined, but those “things” typically include:

Tool use

Memory, short or long term

Self-calling or multi-step reasoning

Agentic LLM systems are quite promising for:

Long-range planning

Self-critique and correction

Automating coding

Being your coworker

And more

Back in 2003, Russell and Norvig came up with a taxonomy of intelligent agents. The simplest is a thermostat that reacts to the environment by turning a heater on and off. The most complex is a learning agent, which can improve beyond its initial performance by learning from its actions and the responses to them.

In current GenAI, there is no broad agreement on what makes an LLM an agent. From my experience, when people say agents, they tend to mean an LLM that has these capabilities:

| Capability | Chatbot-Focused LLM | Agent |
|---|---|---|
| Tool Use | Can’t call out to other systems | Has access to computational tools which it can call for itself |
| Memory/External State | Stores memory in context window (short-term memory) | Can utilize local memory stores or use services like the web for memory |
| Planning Capabilities | Typically returns back a single turn of conversation and ends generation | Can build a multi-step plan and then execute it |
| Self-prompting/Actuation | Waits for user string before doing something | Can call itself in a loop a fixed or variable number of times |

In practice, these capabilities make an LLM system quite powerful. Agents are capable of automating routine tasks, collecting information on your behalf, and providing richer experiences and answers.

Let’s build an agent ourselves, then highlight examples of agents with each of the capabilities listed above.

An agent in code#

At their core, agents are quite simple. Here is an example in which a Gemma 2 2B instruction-tuned model asks for an external function to be called, and the result is then used to augment its response.

```python
import json

import ollama


def weather_agent_llm(query):
    """This is the LLM Agent"""
    # Make an initial call to the LLM
    print("Calling LLM")
    system_instruction = """If the user prompts about the weather in a location respond with {"city": location}
with no other output, no ``` ticks
"""
    response = ollama.generate(model='gemma2:2b', prompt=f"{system_instruction} {query}")["response"]

    try:
        # If JSON is returned, call the tool and generate again with its result
        json_obj = json.loads(response)
        print(json_obj)
        weather = get_weather(json_obj["city"])
        prompt = f"""The user is in {json_obj["city"]} and the weather is {weather}. Use a cheery pirate voice"""
        response = ollama.generate(model='gemma2:2b', prompt=prompt)["response"]
    except (json.JSONDecodeError, KeyError, TypeError):
        # If the reply is not the expected JSON, just return a regular generation
        print("Falling back to no system prompt")
        response = ollama.generate(model='gemma2:2b', prompt=query)["response"]

    return response


def get_weather(city):
    """Get the weather"""
    print(f"Calling weather function with {city}")
    if city == "Los Angeles":
        return "Sunny"
    return "Cloudy"
```

```python
weather_agent_llm("What's the weather in Los Angeles")
```

```
Calling LLM
{'city': 'Los Angeles'}
Calling weather function with Los Angeles
"Ahoy, matey! It be a glorious day in ol' LA, I say! Sun shinin' bright like a doubloon on a treasure chest! Go find yerself some fun under that cerulean sky, ye scurvy dog! May yer days be filled with sunshine and plunder! 🦜☀️💰\n"
```

```python
weather_agent_llm("What's the weather in Boston")
```

```
Calling LLM
{'city': 'Boston'}
Calling weather function with Boston
"Ahoy, matey! Looks like yer steppin' into a right proper cloudy sky o'er Boston. 🏴\u200d☠️ But fear ye not! There's magic in the mist, and treasures in the drizzle! ☔️ Get yerself a warm grog and a hearty dose of adventure, and let's hoist the colors, eh? 💰 \n"
```

```python
weather_agent_llm("Whats a recipe for eggs")
```

```
Calling LLM
Falling back to no system prompt
'You\'re asking for something very general! "Eggs" can be cooked so many different ways, it would be helpful to know what kind of recipe you\'re looking for. 😊\n\n**To help me suggest the perfect egg recipe, tell me:**\n\n* **What kind of meal are you making?** (Breakfast, lunch, dinner, dessert?)\n* **What ingredients do you have on hand?** \n* **How much time do you have to cook?** (Quick and easy or more elaborate?)\n* **Do you have any dietary restrictions?** (Vegetarian, vegan, gluten-free etc.)\n\nOnce I have this info, I can give you a delicious recipe that\'s perfect for you! 🍳 ✨ \n'
```

You can imagine that we could replace this function with anything. We could have the agent:

Get additional information from the internet.

Perform an action, such as sending a text.

Make a decision, such as warning you to carry an umbrella if it might rain.

Call other LLMs.
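To make the list above a bit more concrete, here is a minimal sketch of putting several functions behind one dispatcher. Everything in it is an assumption for illustration: the JSON shape with `tool` and `args` keys, the `TOOLS` registry, and the `umbrella_advice` and `send_text` helpers are hypothetical names of my own, not part of Ollama or any other library. The model's reply is passed in as a plain string, so the sketch runs without an LLM.

```python
import json


def get_weather(city):
    """Toy weather lookup, same behavior as the stub above."""
    return "Sunny" if city == "Los Angeles" else "Cloudy"


def umbrella_advice(city):
    """Hypothetical decision tool: warn about umbrellas when it is not sunny."""
    return "No umbrella needed" if get_weather(city) == "Sunny" else "Carry an umbrella"


def send_text(to, body):
    """Hypothetical action tool: a real version would call an SMS service."""
    return f"(not actually sent) to={to}: {body}"


# Registry mapping the tool names the model may request to Python callables.
TOOLS = {
    "get_weather": get_weather,
    "umbrella_advice": umbrella_advice,
    "send_text": send_text,
}


def dispatch(llm_output):
    """Run the tool named in the model's JSON reply.

    Returns None when the reply is not a well-formed tool request,
    so the caller can fall back to a regular generation.
    """
    try:
        request = json.loads(llm_output)
        tool = TOOLS[request["tool"]]
        return tool(**request["args"])
    except (json.JSONDecodeError, KeyError, TypeError):
        return None


print(dispatch('{"tool": "umbrella_advice", "args": {"city": "Boston"}}'))
print(dispatch("Here is a recipe for eggs"))
```

Adding a new capability then only means writing one more function and registering it. Calling another LLM would just be one more entry in `TOOLS`.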

Any LLM can be an agent

The Gemma model above was not built specifically as an agent LLM, but in this case it was prompted to act as one. However, some LLMs are fine-tuned in ways that make them better at agentic workflows than others. If you are considering an LLM for agentic workflows, check whether it includes the functionality you need, whether that’s tool use or planning. If you need higher performance than you’re getting from prompting alone, you’ll likely need fine-tuning.

This flexibility is why we’re seeing hundreds, if not thousands, of agentic systems advertised by organizations of all types.

Examples#

Here are some real-world examples to highlight the span of usage, various implementations, and the breadth of organizations moving into the agentic space.

Google DS Agent - Upload a CSV and a prompt, and get a Jupyter notebook with basic analysis as an output. The UI also shows you the initial plan and executed plan, so you get a sense of how the agent can self-correct if it runs into errors.

Claude Computer Use - Claude can now control your OS, taking actions on your behalf anywhere on your computer.

Microsoft Autonomous Agent - Your next coworker could be AI, or so Microsoft says. This blog post is light on details, but it does highlight how far people are thinking. These agents could become equivalent to humans in day-to-day work.

Devin Coding Agent - A coding bot that automates junior-level software engineer tasks.

Practical Tips#

Agents will be a part of your computer use experience in one way or another soon.

Research#

Like all areas of AI, agents are an ongoing area of research.

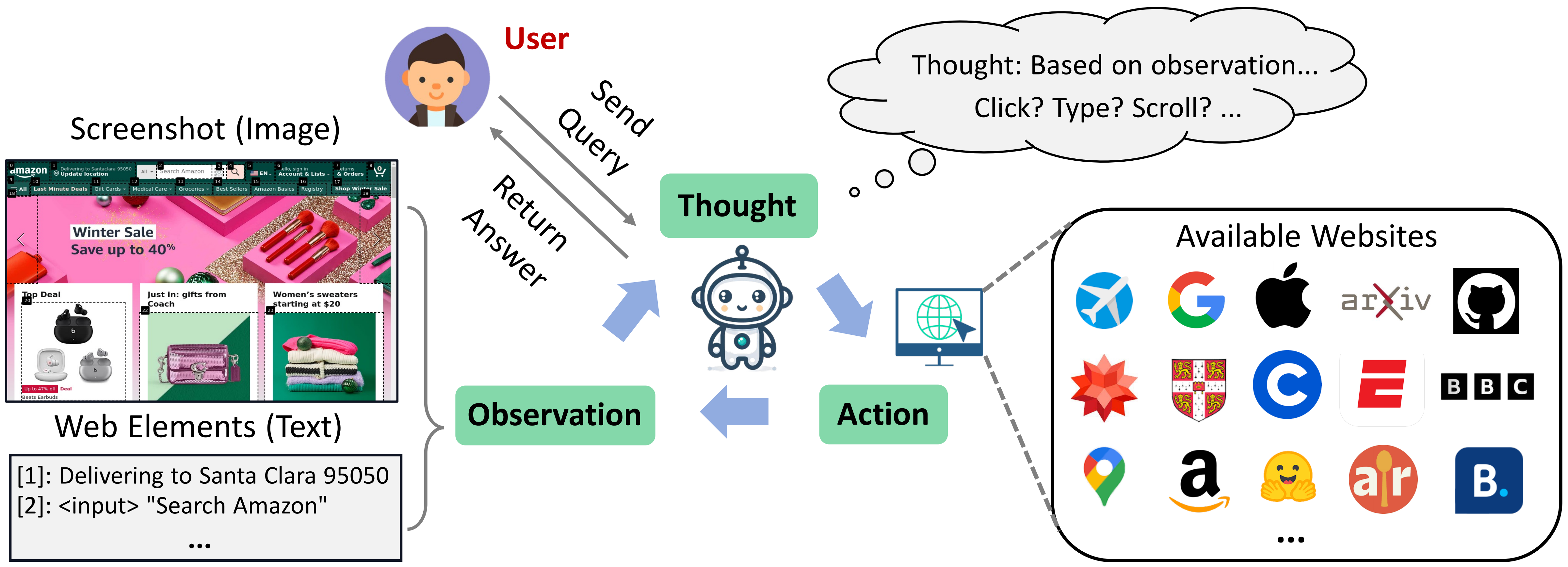

Benchmark development is both a practical and aspirational arm of agentic systems. Like all benchmarks, these both encapsulate expected capabilities of future systems while measuring the capability of current systems. Researchers have devised SWE-Bench for coding tasks, and WebVoyager for web browsing tasks, among others.

Fig. 46 A infographic for the WebVoyager benchmark, showing a browser agent. source#

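There’s ongoing fine-tuning research aimed at improving on these benchmarks. Similarly, there is research on specific prompting styles that enable agentic behavior. ReAct, short for Reasoning and Acting, is one such style: the model alternates between writing out its reasoning and choosing an action, then sees the result of that action before continuing.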
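Here is a rough sketch of what a ReAct-style loop can look like in code. It is not the implementation from the ReAct paper, just the general shape under some assumptions: the `Thought:` / `Action: tool[input]` / `Observation:` / `Final Answer:` text format is one common convention, and `scripted_llm` is a hypothetical stand-in for a real model so the example runs without one.

```python
import re


def get_weather(city):
    """Toy tool, same behavior as the weather stub earlier in this chapter."""
    return "Sunny" if city == "Los Angeles" else "Cloudy"


TOOLS = {"get_weather": get_weather}

# Matches lines such as: Action: get_weather[Boston]
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def scripted_llm(transcript):
    """Hypothetical stand-in for a model: act first, answer once an observation exists."""
    if "Observation:" not in transcript:
        return "Thought: I need to look up the weather.\nAction: get_weather[Boston]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have what I need.\nFinal Answer: The weather in Boston is {observation}."


def react_loop(question, llm, max_steps=5):
    """Alternate model steps and tool observations until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_RE.search(step)
        if match is None:
            break  # the model neither acted nor answered, so stop
        tool_name, tool_input = match.groups()
        tool = TOOLS.get(tool_name)
        observation = tool(tool_input) if tool else f"unknown tool {tool_name}"
        transcript += f"\nObservation: {observation}"
    return None, transcript


answer, transcript = react_loop("What's the weather in Boston?", scripted_llm)
print(transcript)
```

The `max_steps` cap matters in practice: without it, a model that never emits a final answer would loop forever.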

Developers#

LLM frameworks are building agentic capabilities to varying degrees. Ollama now supports tool use, which simplifies building your own agent. LangChain includes a set of agentic functionality; see in particular their docs on Agent Types. Some frameworks are purpose-built for agentic behavior, such as AutoGPT.

However, I agree with Simon Willison: these frameworks are often more hassle than they’re worth. It’s much easier to build your own agent.
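As a rough illustration of building your own with Ollama's tool support, here is a sketch that describes one tool to the model and runs whatever calls come back. Treat the details as assumptions: the model name `llama3.1` is only a guess at a tool-capable model you might have pulled, the `REGISTRY`, `run_tool_calls`, and `ask_with_tools` names are my own, and the tool-call shape reflects my understanding of the `ollama` Python client, so check it against the version you have installed. The Ollama call is isolated in one function so the rest runs without a server.

```python
def get_weather(city):
    """Toy tool, same behavior as the weather stub earlier in this chapter."""
    return "Sunny" if city == "Los Angeles" else "Cloudy"


# JSON-schema description of the tool, passed to the model alongside the chat messages.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "The name of the city"},
            },
            "required": ["city"],
        },
    },
}

# Hypothetical registry from tool name to Python callable.
REGISTRY = {"get_weather": get_weather}


def run_tool_calls(tool_calls, registry=REGISTRY):
    """Execute each requested call and return (name, result) pairs, skipping unknown tools."""
    results = []
    for call in tool_calls or []:
        name = call["function"]["name"]
        arguments = call["function"]["arguments"]
        if name in registry:
            results.append((name, registry[name](**arguments)))
    return results


def ask_with_tools(query, model="llama3.1"):
    """Needs a running Ollama server and a tool-capable model (the default is an assumption)."""
    import ollama  # imported lazily so the helpers above work without Ollama installed

    response = ollama.chat(
        model=model,
        messages=[{"role": "user", "content": query}],
        tools=[WEATHER_TOOL],
    )
    return run_tool_calls(response["message"].get("tool_calls"))
```

With a server running, `ask_with_tools("What's the weather in Los Angeles?")` would return the tool results. A fuller agent would send those results back to the model for a final reply.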

End Users#

As an end user, be savvy about the actual value of an agent, as well as the technology you’re paying for. Agents are promising, but on many tasks they are still not as good as humans. Be sure to verify benchmarks or claimed performance on your own data before making irreversible commitments. Also, be sure to understand what unique technology is being provided. Some organizations providing agents truly are spending millions of dollars or more developing new capabilities that will be hard to find elsewhere. However, many companies do something similar to my agent above, where the model itself is off the shelf and wired up to a couple of Python functions. Ask how the agent was developed, which base model it uses, what additional training was done, which products are comparable, and what their benchmarks are. This will help you separate truly valuable systems from thin wrappers around an existing model.
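Checking claimed performance on your own data does not need much machinery. The sketch below assumes your agent can be wrapped as a function from a query string to an answer string, and it scores answers with a crude case-insensitive substring match. Both are simplifying assumptions, and `evaluate`, `toy_agent`, and the example queries are hypothetical names and data for illustration only.

```python
def evaluate(agent, examples):
    """Score an agent on (query, expected) pairs.

    An answer counts as correct when the expected text appears in it,
    ignoring case. Returns the accuracy and the list of failed cases.
    """
    if not examples:
        raise ValueError("examples must not be empty")
    failures = []
    for query, expected in examples:
        answer = agent(query)
        if expected.lower() not in answer.lower():
            failures.append((query, expected, answer))
    accuracy = 1 - len(failures) / len(examples)
    return accuracy, failures


def toy_agent(query):
    """Stand-in for a real agent: it only knows about Los Angeles."""
    return "Sunny" if "Los Angeles" in query else "Cloudy"


# Made-up examples. In practice, use queries and answers from your own workload.
examples = [
    ("Weather in Los Angeles?", "sunny"),
    ("Weather in Boston?", "cloudy"),
    ("Weather in Phoenix?", "sunny"),
]

accuracy, failures = evaluate(toy_agent, examples)
print(f"accuracy: {accuracy:.2f}")
for query, expected, answer in failures:
    print(f"FAILED {query!r}: expected {expected!r}, got {answer!r}")
```

Reading through the failures is usually more informative than the accuracy number, since it shows which kinds of requests the agent cannot handle.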

References#

Cognitive Architectures for agents - Covers various architectures, from simpler non-agent LLMs to more complex ones that include memory.

ReAct pattern as implemented by Simon Willison - A great explainer and template for how to build personal agents.

Different architectures for Agent Memory - A broad overview of different agent architectures.